Query your own documents with LlamaIndex and Gemini

In this article, I explain how to build an application that indexes and queries your own documents using LlamaIndex and Gemini. I provide a step-by-step guide to creating the application in Python.

The prerequisites for this example are:

- Visual Studio Code

- Python

- A Gemini API key, which you can obtain from https://aistudio.google.com
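Instead of pasting the key into the source file, you may prefer to keep it in an environment variable. The sketch below assumes the variable is named `GOOGLE_API_KEY`, the same name the code later in this article sets; `get_google_api_key` is a hypothetical helper, not part of LlamaIndex.

```python
import os


def get_google_api_key(var_name: str = "GOOGLE_API_KEY") -> str:
    """Return the Gemini API key from the environment, failing loudly if it is missing."""
    # Hypothetical helper: reads the key instead of hard-coding it in demo.py.
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Environment variable {var_name} is not set. "
            "Create a key at https://aistudio.google.com and export it first."
        )
    return key


if __name__ == "__main__":
    try:
        key = get_google_api_key()
        # Show only a short prefix so the full key never appears on screen.
        print("Found API key starting with", key[:4] + "...")
    except RuntimeError as err:
        print(err)
```

On macOS or Linux you would set the variable with `export GOOGLE_API_KEY=...`, and in Windows PowerShell with `$env:GOOGLE_API_KEY="..."`. If you go this route, remove the `os.environ["GOOGLE_API_KEY"] = ...` line from "demo.py", since it would overwrite the exported value with the placeholder text.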

Open Visual Studio Code and create a file named "demo.py". Then open the Terminal menu and click New Terminal to open a new terminal. In the terminal, enter the command below to install LlamaIndex and its Gemini LLM and Gemini embedding integrations on your machine.

```shell
pip install llama-index llama-index-llms-gemini llama-index-embeddings-gemini
```
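To confirm the install succeeded before writing any LlamaIndex code, you could run a quick check like the one below. It is a small sketch using only the standard library's `importlib.metadata`; `missing_packages` is a hypothetical helper, and the list simply repeats the three distribution names from the pip command above.

```python
from importlib import metadata

# Distribution names installed by the pip command above.
REQUIRED_PACKAGES = [
    "llama-index",
    "llama-index-llms-gemini",
    "llama-index-embeddings-gemini",
]


def missing_packages(names):
    """Return the subset of distribution names that are not installed."""
    missing = []
    for name in names:
        try:
            metadata.version(name)  # raises if the distribution is absent
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = missing_packages(REQUIRED_PACKAGES)
    if missing:
        print("Still missing:", ", ".join(missing))
    else:
        print("All LlamaIndex packages are installed.")
```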

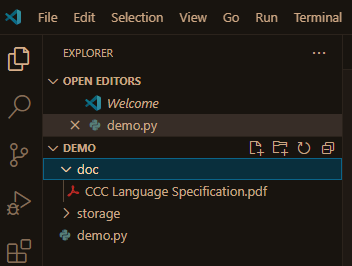

Create a folder named "doc" in the root directory of the application, as shown in the image below, and store the documents you want to query in it.
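If you want the script to tell you when the "doc" folder is missing or empty, a small check like the following could run first. This is a hypothetical helper using only `pathlib`; it does not reproduce how `SimpleDirectoryReader` selects or filters files.

```python
from pathlib import Path


def list_documents(folder: str = "doc") -> list[str]:
    """Create the folder if needed and return the sorted names of the files inside it."""
    path = Path(folder)
    path.mkdir(parents=True, exist_ok=True)  # no error if it already exists
    files = sorted(p.name for p in path.iterdir() if p.is_file())
    if not files:
        print(f'"{folder}" is empty; copy the documents you want to query into it.')
    return files


if __name__ == "__main__":
    print(list_documents())
```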

Now copy the code below and paste it into the "demo.py" file.

```python
import os

from llama_index.core import (
    Settings,
    SimpleDirectoryReader,
    StorageContext,
    VectorStoreIndex,
    load_index_from_storage,
)
from llama_index.core.node_parser import SentenceSplitter
from llama_index.embeddings.gemini import GeminiEmbedding
from llama_index.llms.gemini import Gemini

# Replace with the API key you created at https://aistudio.google.com
os.environ["GOOGLE_API_KEY"] = "your google api key obtained from aistudio.google.com"

# Use Gemini for answers and embeddings instead of the default OpenAI models
Settings.llm = Gemini()
Settings.embed_model = GeminiEmbedding(model_name="models/embedding-001")
# Split documents into chunks of up to 512 tokens, overlapping by 20 tokens
Settings.node_parser = SentenceSplitter(chunk_size=512, chunk_overlap=20)
Settings.num_output = 2080
Settings.context_window = 3900

PERSIST_DIR = "./storage"

if not os.path.exists(PERSIST_DIR):
    # First run: read and embed every document in "doc", then save the index
    documents = SimpleDirectoryReader("doc").load_data()
    index = VectorStoreIndex.from_documents(documents)
    index.storage_context.persist(persist_dir=PERSIST_DIR)
else:
    # Later runs: load the saved index instead of embedding again
    storage_context = StorageContext.from_defaults(persist_dir=PERSIST_DIR)
    index = load_index_from_storage(storage_context=storage_context)

query_engine = index.as_query_engine()

# Answer questions until the user types "exit"
question = ""
while question != "exit":
    if question != "":
        response = query_engine.query(question)
        print(response.response + "\n\n")
    question = input("Ask the question: ")
```

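In the above code, the code highlighted in yellow tells LlamaIndex which models to use: the `Settings` object sets Gemini as the LLM and the Gemini embedding model for embeddings. If these are not set, LlamaIndex falls back to its default OpenAI models. The `Settings` object is also where the code sets how documents are split into chunks (`SentenceSplitter`) and the output and context-window sizes.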
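`SentenceSplitter(chunk_size=512, chunk_overlap=20)` breaks each document into chunks of up to 512 tokens, with consecutive chunks sharing 20 tokens so that text cut at a boundary still keeps some context. The sketch below illustrates only that sliding-window idea and is not LlamaIndex's algorithm: it counts whitespace-separated words instead of tokens and ignores sentence boundaries, which the real splitter tries to respect. `chunk_words` is a hypothetical name.

```python
def chunk_words(text: str, chunk_size: int, chunk_overlap: int) -> list[list[str]]:
    """Split text into windows of chunk_size words; neighbours share chunk_overlap words."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(words[start:start + chunk_size])
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_words(sample, chunk_size=4, chunk_overlap=1):
        print(chunk)
```

With ten words, a window of 4 and an overlap of 1, this prints three chunks, `['w0', 'w1', 'w2', 'w3']`, `['w3', 'w4', 'w5', 'w6']` and `['w6', 'w7', 'w8', 'w9']`, where each chunk starts with the last word of the previous one.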

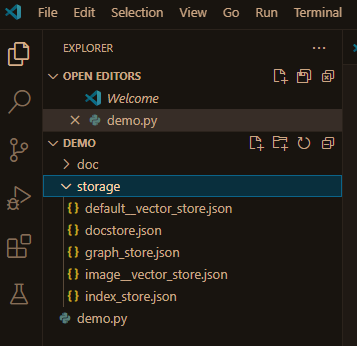

The code highlighted in green embeds the local documents stored in the "doc" folder. After embedding, the vector index is saved as JSON files in the "storage" directory, as shown in the figure. If the "storage" directory already exists, the application skips embedding and loads the vector index stored there instead.
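The same build-once, load-afterwards pattern can be shown without LlamaIndex. In the sketch below, `build_index`, `load_or_build` and the `index.json` file are hypothetical stand-ins for embedding the documents and for the files `VectorStoreIndex` persists; only the control flow mirrors "demo.py". One consequence of checking only whether the storage folder exists: documents added to "doc" later are not indexed until you delete the "storage" folder.

```python
import json
import os


def load_or_build(persist_dir: str, build_index):
    """Load a cached index from persist_dir, or build and save it on the first run."""
    cache_file = os.path.join(persist_dir, "index.json")  # hypothetical file name
    if not os.path.exists(persist_dir):
        index = build_index()  # expensive step: runs only when nothing is cached
        os.makedirs(persist_dir)
        with open(cache_file, "w", encoding="utf-8") as f:
            json.dump(index, f)
        return index, "built"
    with open(cache_file, encoding="utf-8") as f:
        return json.load(f), "loaded"


if __name__ == "__main__":
    import tempfile

    def fake_build():
        # Stands in for embedding the documents.
        return {"doc1.txt": [0.1, 0.2]}

    with tempfile.TemporaryDirectory() as tmp:
        storage = os.path.join(tmp, "storage")
        print(load_or_build(storage, fake_build)[1])  # first call builds
        print(load_or_build(storage, fake_build)[1])  # second call loads
```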

The code highlighted in blue reads a question from the user, searches the vector store for relevant chunks, passes them to Gemini, and prints the answer on screen. Typing "exit" ends the loop. You can see sample output from running the application in the image below.
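The question loop can also be written as a small function that takes the answering step as a parameter, which makes it easy to try without calling Gemini. This is a sketch: `run_query_loop` is a hypothetical name, and in "demo.py" you could pass `lambda q: query_engine.query(q).response` as `answer`.

```python
def run_query_loop(answer, read=input, write=print, exit_word="exit"):
    """Ask questions until the user types exit_word; blank input is skipped."""
    while True:
        question = read("Ask the question: ").strip()
        if question == exit_word:
            break
        if question:
            write(answer(question) + "\n")


if __name__ == "__main__":
    # Stand-in answerer; replace it with a call to the real query engine.
    run_query_loop(lambda q: f"(answer to: {q})")
```

Passing `read` and `write` as parameters keeps the default interactive behaviour while letting you feed scripted questions when testing.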
